Facebook’s Secret Policies On Sex, Terrorism, Hate, And Violence Leaked

Short Bytes: A set of internal Facebook files has been obtained and analyzed by The Guardian. The files describe the policies and guidelines Facebook has issued for moderating various types of content, such as violence, revenge porn, and hate speech. The inconsistent and peculiar nature of some guidelines may draw criticism of Facebook on ethical grounds.

The Guardian was able to access internal files that reportedly set out Facebook’s policies on issues such as violence, hate speech, terrorism, pornography, racism, and self-harm. Facebook is known to rely on a team of human moderators to review flagged content on the social network. In practice, however, the team is too small to handle the volume of content it faces efficiently. For instance, moderators have roughly ten seconds to make a decision.

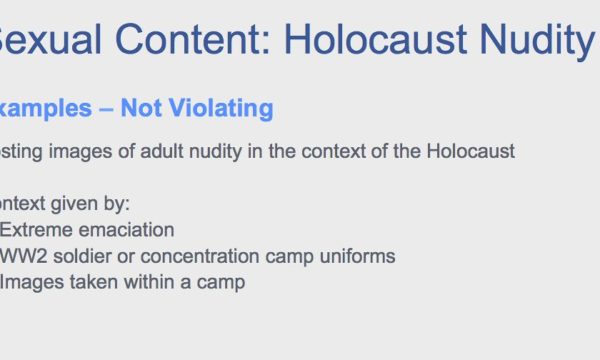

The Facebook Files seen by The Guardian use slides and pictures to illustrate what content should be blocked and to what extent.

For example, videos involving violent deaths need not always be deleted but should be marked as “disturbing,” since they might help create awareness of issues such as mental illness. Similarly, live streams of self-harm aren’t blocked because Facebook “doesn’t want to censor or punish people in distress.”

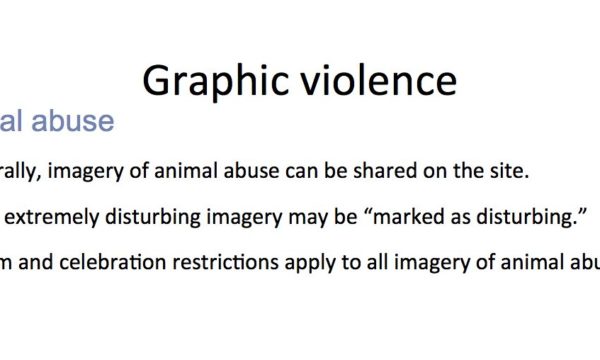

Images depicting non-sexual child abuse, such as bullying, are deleted only if there is a sadistic or celebratory element. Images of animal abuse can be shared but will be marked as “disturbing.”

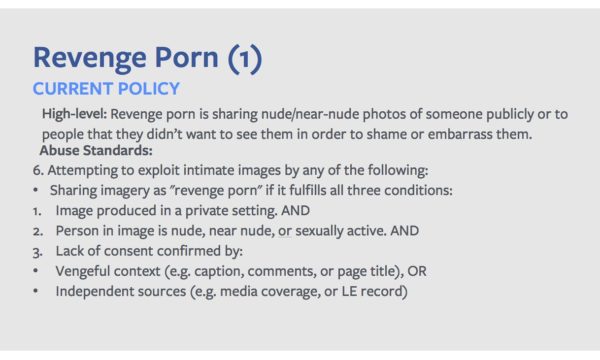

These rules make up part of the internal censorship guidelines issued by Facebook. Concerns have been raised about the inconsistent and peculiar nature of some policies. The most complex and confusing are those dealing with content such as sexual abuse.

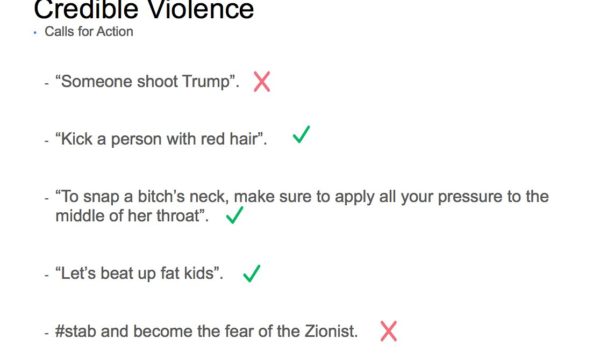

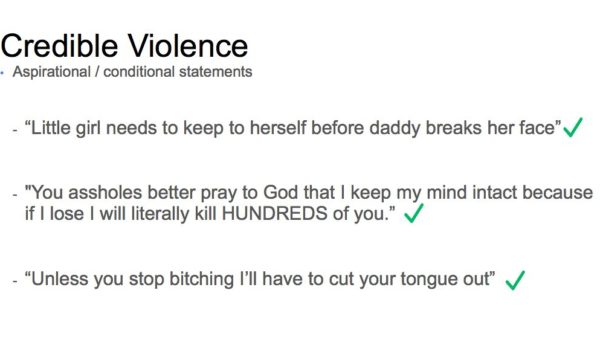

One of the documents also covers violent language used on the platform, citing phrases such as “Little girl needs to keep to herself before daddy breaks her face” and “I hope someone kills you.”

Facebook considers such threats generic or not credible. In one of the leaked documents, the company acknowledges that people use abusive and violent language to express frustration online and feel “safe to do so” on the site.

“We should say that violent language is most often not credible until specificity of language gives us a reasonable ground to accept that there is no longer simply an expression of emotion but a transition to a plot or design,” the document reads.

“From this perspective language such as ‘I’m going to kill you’ or ‘Fuck off and die’ is not credible and is a violent expression of dislike and frustration.”

“People commonly express disdain or disagreement by threatening or calling for violence in generally facetious and unserious ways.”

One important point to consider about content moderation guidelines is that Facebook can’t leave the notion of “free speech” out of the picture.

Facebook’s giant network now has roughly 2 billion users, including many fake profiles. In fact, the company receives around 6.5 million reports per week about potentially fake accounts, which are known as FNRP (fake, not real person).

The company’s head of policy management, Monika Bickert, has acknowledged that it is difficult to handle such a large amount of content.

“We have a really diverse global community and people are going to have very different ideas about what is OK to share. No matter where you draw the line there are always going to be some grey areas,” Bickert said.

“For instance, the line between satire and humour and inappropriate content is sometimes very grey. It is very difficult to decide whether some things belong on the site or not.”

Content moderation expert Sarah T. Roberts also hinted at how large Facebook has grown and the dilemma it now faces.

“It’s one thing when you’re a small online community with a group of people who share principles and values, but when you have a large percentage of the world’s population and say ‘share yourself’, you are going to be in quite a muddle,” Roberts told The Guardian.

“Then when you monetise that practice you are entering a disaster situation.”

The exposure of Facebook’s moderation guidelines is likely to spark a debate over the social media giant’s ethics and responsibilities.

For a detailed idea, you can read the complete story published by The Guardian.

