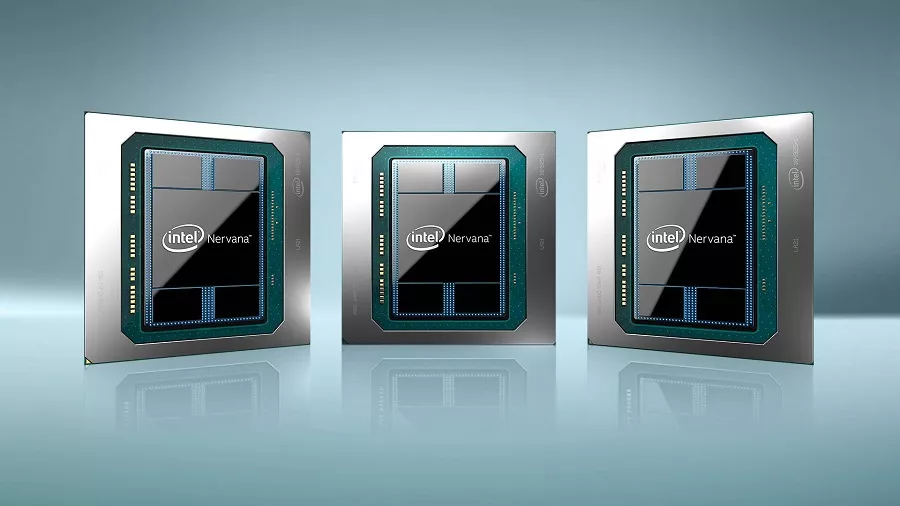

Intel Aims To Conquer AI With Nervana, Its New Family Of AI Chips

Tech giants including Google, Facebook, and Apple are increasingly focused on staying ahead of the curve in machine learning. Intel does not plan to be left behind in this AI race and aims to draw level with Nvidia. “We are thrilled to have Facebook in close collaboration sharing its technical insights as we bring this new generation of AI hardware to market,” said Intel CEO Brian Krzanich.


According to Intel, the Nervana Neural Network Processor (NNP) uses high-capacity, high-speed, high-bandwidth memory, which will provide the maximum level of on-chip storage and very fast memory access. The NNP includes bi-directional high-bandwidth links that enable interconnection between ASICs. Its hardware also features separate pipelines for computation and data management, so new data is readily available for computation.

Intel has adopted a different memory subsystem, eliminating the cache architecture and managing all on-chip memory through software, which determines how memory is allocated. The introduction of the NNP will bring significant changes in the parallelization of neural networks while reducing the power required for computations.
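To make the idea of software-managed memory more concrete, here is a minimal Python sketch. It does not reflect Intel's actual software or the NNP's real memory sizes: the `Scratchpad` class, the `plan_tile_size` helper, and the 3,072-byte capacity are all hypothetical names and numbers chosen for illustration. The point it shows is that, without a cache, the software has to decide up front what fits in on-chip memory and place each buffer explicitly.

```python
class Scratchpad:
    """Hypothetical fixed-size on-chip memory managed explicitly by software."""

    def __init__(self, capacity_bytes):
        self.capacity = capacity_bytes
        self.used = 0
        self.buffers = {}  # buffer name -> size in bytes

    def alloc(self, name, size_bytes):
        # There is no cache to fall back on, so a buffer that does not fit
        # is a planning error rather than a slow path.
        if name in self.buffers:
            raise ValueError(f"buffer {name!r} is already allocated")
        if self.used + size_bytes > self.capacity:
            raise MemoryError(
                f"cannot place {name!r}: {size_bytes} bytes requested, "
                f"{self.capacity - self.used} bytes free"
            )
        self.buffers[name] = size_bytes
        self.used += size_bytes

    def free(self, name):
        self.used -= self.buffers.pop(name)


def plan_tile_size(scratchpad_bytes, bytes_per_element=4):
    """Largest T such that three T x T tiles (two inputs, one output) fit."""
    if 3 * bytes_per_element > scratchpad_bytes:
        raise ValueError("scratchpad too small for even a 1 x 1 tile")
    t = 1
    while 3 * (t + 1) ** 2 * bytes_per_element <= scratchpad_bytes:
        t += 1
    return t


# Assumed capacity for illustration only; real on-chip memories are far larger.
pad = Scratchpad(3072)
tile = plan_tile_size(pad.capacity)
for name in ("A_tile", "B_tile", "C_tile"):
    pad.alloc(name, tile * tile * 4)
print(tile, pad.used)
```

In this toy setup the planner picks the tile size before any data moves, which is the kind of decision a cache would otherwise make implicitly at run time.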

“We have multiple generations of Intel Nervana NNP products in the pipeline that will deliver higher performance and enable new levels of scalability for AI models,” said Naveen Rao, the CEO and co-founder of Nervana, which was acquired by Intel in August 2016. “We are gonna look back in 10 years and really see this was a pivotal point in the history of computation that processors change to focus on neural networks,” he added.

Last week, Intel unveiled its 17-qubit superconducting test chip for quantum computing, and it expects to deliver a 49-qubit chip by the end of 2017. What is your take on Intel’s recent developments? Share your views in the comments.

Source: Intel