Nvidia’s New Research Teaches Robots to Learn From Humans

Nvidia has presented new research at the International Conference on Robotics and Automation (ICRA) in Brisbane, Australia, that teaches robots to learn tasks by watching humans perform them.

Until now, robots were trained to carry out a set of instructions a fixed number of times, in an environment isolated from the very humans who programmed them. In the demonstration, Nvidia stacked solid blocks of different colors in a fixed pattern to teach the robot to replicate the task.

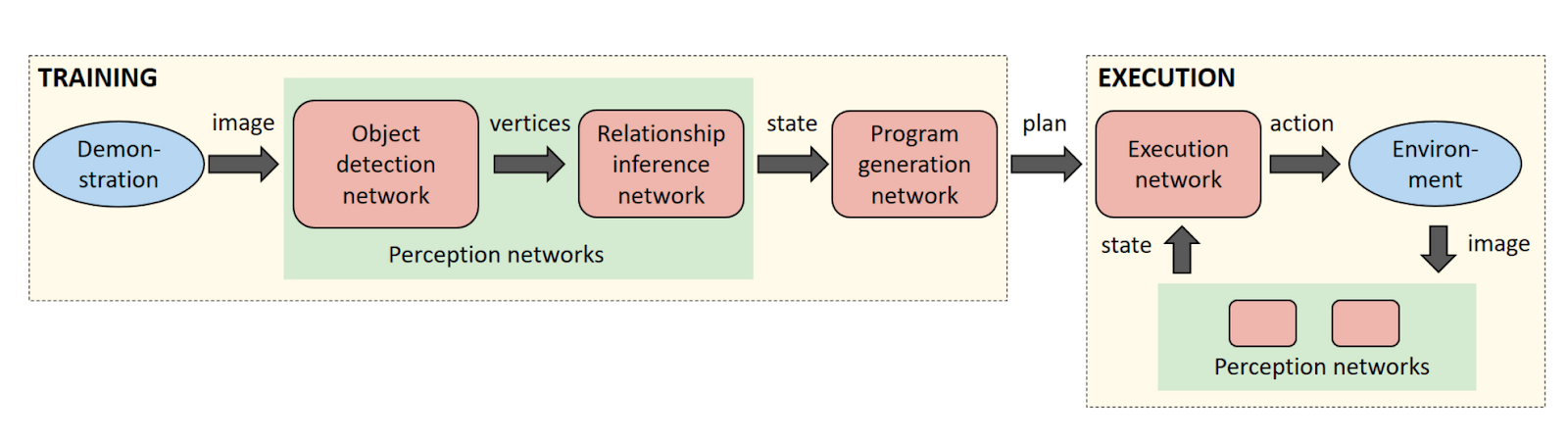

The sequence of neural networks trained for the demonstration successfully inferred the relationships between the blocks and generated a program that the robot could execute autonomously to imitate the task.

The video released by Nvidia shows that the system, trained on an Nvidia Titan X GPU, learned the task from a single demonstration performed by a human. Because the program refers to the current state of the scene, it corrected mistakes in real time while performing the task.

“A camera acquires a live video feed of a scene, and the positions and relationships of objects in the scene are inferred in real time by a pair of neural networks. The resulting percepts are fed to another network that generates a plan to explain how to recreate those perceptions,” read Nvidia’s blog.
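
The flow described in that quote (perceive the scene, generate a plan, execute while checking the current state) can be illustrated with a toy sketch. This is not Nvidia's code: the neural networks are replaced by plain functions, and every name here (`Scene`, `infer_goal`, `make_plan`, `run`, the `execute` callback) is hypothetical. It also assumes the base block is already on the table.

```python
# Hypothetical sketch of the control flow only; the real system uses neural networks.
from typing import Callable, Dict, List, Optional, Tuple

# A scene maps each block to the block it rests on (None means the table).
Scene = Dict[str, Optional[str]]
Step = Tuple[str, str]  # (block to move, block to place it on)


def infer_goal(demonstration: Scene) -> Scene:
    """Stand-in for perception: treat the demo's final state as the goal."""
    return dict(demonstration)


def make_plan(goal: Scene, current: Scene) -> List[Step]:
    """Stand-in for plan generation: list the missing placements, bottom-up."""
    plan: List[Step] = []
    placed = {b for b, below in goal.items() if below is None}
    pending = {b: below for b, below in goal.items() if below is not None}
    while pending:
        ready = sorted(b for b, below in pending.items() if below in placed)
        if not ready:
            raise ValueError("goal has a cycle or an unknown supporting block")
        for block in ready:
            if current.get(block) != pending[block]:
                plan.append((block, pending[block]))
            placed.add(block)
            del pending[block]
    return plan


def run(goal: Scene, current: Scene,
        execute: Callable[[Scene, str, str], Scene],
        max_attempts: int = 10) -> Scene:
    """Re-plan from the observed state after every action, so a failed
    placement is simply retried on the next pass."""
    for _ in range(max_attempts):
        plan = make_plan(goal, current)
        if not plan:
            break
        block, dest = plan[0]
        current = execute(current, block, dest)
    return current
```

Because `run` rebuilds the plan from the observed scene on each pass rather than replaying a fixed script, an action that fails leaves the scene unchanged and is attempted again, which mirrors the real-time error correction the article describes.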

The research aims to cut the cost of reprogramming a robot to perform a new set of tasks. “What we’re interested in doing is making it easier for a non-expert user to teach a robot a new task by simply showing it what to do,” said Stan Birchfield, the principal scientist involved in the research.

With this research, Nvidia joins companies such as Google and SRI that are working to develop AI-based systems that are intelligent and possess “common sense.”
