The Biggest Google I/O 2024 Announcements

At the recent Google I/O 2024 event, Google revealed several important updates. These include launching new Gemini and Gemma models, adding the latest AI features to Android, introducing a new AI voice assistant, and unveiling a tool that turns text into videos.

In December 2023, Google launched its first natively multimodal model, Gemini 1.0, in three sizes: Ultra, Pro, and Nano. Just a few months later, it released 1.5 Pro with enhanced performance. Both 1.5 Pro and 1.5 Flash are now in public preview on Google AI Studio and Vertex AI, featuring a 1 million token context window. A 2 million token context window for 1.5 Pro is also available via a waitlist to developers using the API and to Google Cloud customers.
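To give a feel for what those context window sizes mean in practice, here is a minimal Python sketch that estimates whether a piece of text would fit in the 1 million or 2 million token window. It assumes roughly four characters per token, which is a crude rule of thumb and not Gemini’s actual tokenizer; the function names and the `CONTEXT_WINDOWS` table are hypothetical and exist only for illustration.

```python
from typing import Optional

# ASSUMPTION: ~4 characters per token. This is a rough heuristic,
# not Gemini's real tokenizer; actual counts vary by language and content.
CHARS_PER_TOKEN = 4

# Window sizes as quoted in the article (labels are illustrative).
CONTEXT_WINDOWS = {
    "public preview (1M tokens)": 1_000_000,
    "waitlist (2M tokens)": 2_000_000,
}


def estimate_tokens(text: str) -> int:
    """Estimate the token count of `text` using ceiling division."""
    return -(-len(text) // CHARS_PER_TOKEN)


def smallest_fitting_window(text: str) -> Optional[str]:
    """Return the label of the smallest window that holds `text`, or None."""
    tokens = estimate_tokens(text)
    # Check windows from smallest to largest limit.
    for name, limit in sorted(CONTEXT_WINDOWS.items(), key=lambda kv: kv[1]):
        if tokens <= limit:
            return name
    return None


if __name__ == "__main__":
    sample = "word " * 1_000_000  # about 5 million characters
    print(estimate_tokens(sample), smallest_fitting_window(sample))
```

For real workloads, an estimate like this would only be a first pass; the token count reported by the API itself is the number that matters.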

Google’s Project Astra aims to be an all-in-one multimodal AI assistant. It’s designed to use your device’s camera to see and understand things, remember where your stuff is located, and perform tasks for you.

Google’s I/O Keynote Highlights: AI Mentioned Over 120 Times

Sundar Pichai, keenly aware of the recent focus on AI technology, concluded a nearly two-hour series of presentations by asking Google’s Gemini model how many times AI had been mentioned. The tally stood at 120, with Pichai’s own mention adding one more. Google is integrating Gemini into nearly all of its products and services, including Android, Search, and Gmail.

LearnLM: Google’s Latest AI Models for Education

LearnLM is a new family of AI models from Google designed for education. Google is bringing LearnLM to a range of its products, such as YouTube, Google Search, and Google Classroom, with the goal of improving the learning experience on each of these platforms.

Users will soon be able to create their own customized chatbots. Google states that these chatbots will offer study guidance and interactive activities, such as quizzes and games, tailored to each learner’s specific preferences.

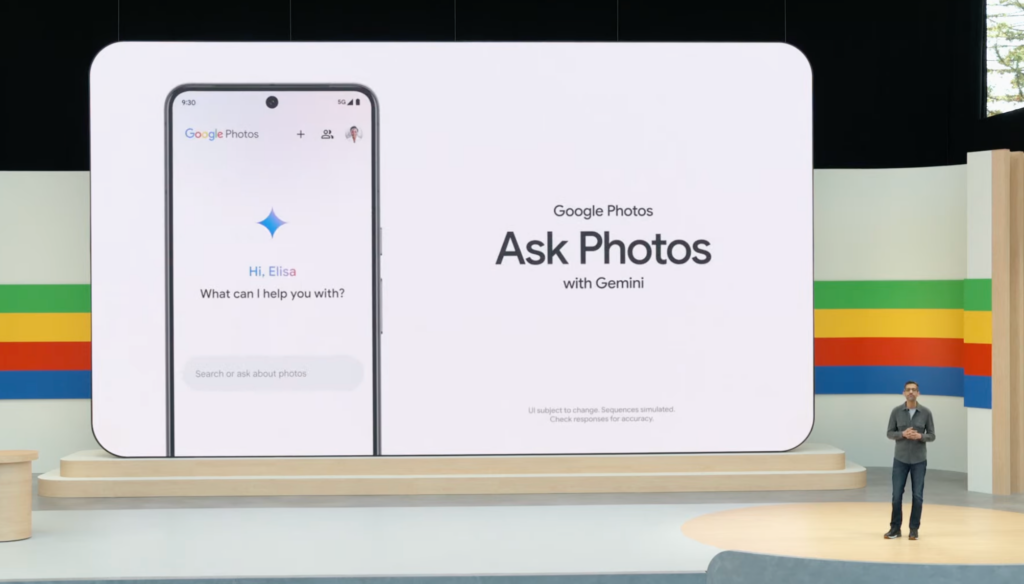

Introducing “Ask Photos”

This upcoming feature, available later this summer, will enable users to search through their Google Photos using everyday language. It taps into AI to understand the content of their photos and other details to make searching easier.

Consider this scenario: You’re at the parking station, ready to pay, but you can’t recall your license plate number. In the past, you’d have to search through Photos using keywords and then scroll through countless images spanning years to find license plates. Now, all you need to do is ask Photos. It can identify the cars that show up often, determine which one is yours, and provide you with the license plate number.

AI-generated quizzes

Google also introduced AI-generated quizzes as part of a new conversational AI tool on YouTube. While watching an educational video, users can “raise their hand” to ask a question at any point. They can also ask the AI to summarize the video or explain why its key topics matter.

Together, these features give learners a more personalized and interactive experience. With personalized quizzes, a conversational AI tool, and the ability to request summaries or explanations, learners can engage with the content in a way that suits their preferences and learning styles, which should improve both engagement and comprehension.

Detecting scams during calls

This upcoming call-monitoring feature is driven by Gemini Nano, a smaller version of Google’s advanced language model. It aims to notify users about potential scam calls as they happen and suggest ending those calls.

Firebase Genkit

Firebase Genkit is like a toolbox for developers that makes it easier to create, launch, and monitor apps that use artificial intelligence (AI). The Firebase team wants developers to be able to start using Genkit seamlessly, so it follows the same methods and principles as the other tools in the Firebase toolkit.

With Genkit, developers can first try out their new app features on their computers to see how they work in a controlled environment. Once they’re happy with how everything functions, they can then release their app for everyone to use with the help of Google’s serverless platforms, like Cloud Functions for Firebase and Google Cloud Run.

Gemini in Gmail

During one demonstration at I/O, Google showcased how a parent could utilize Gemini to summarize recent emails from their child’s school, allowing them to catch up on important information effortlessly. Apart from parsing the email content, the functionality will extend to analyzing attachments such as PDFs. The summary produced will cover essential points or actionable tasks. Within a sidebar integrated into Gmail, users can prompt Gemini for assistance in organizing receipts received via email.

Gemini can perform tasks such as categorizing receipts and transferring them to a designated Drive folder. Additionally, it can extract pertinent information from the receipts and populate it into a spreadsheet for further analysis or record-keeping purposes. According to Google, Gemini will have the capability to not only scan Gmail but also compose emails on your behalf.

Gemini 1.5 Pro and Gemini 1.5 Flash

Gemini 1.5 Pro has received a series of quality improvements across important functions such as translation, coding, and reasoning. Gemini 1.5 Flash is designed to excel in tasks that require quick responses, particularly narrow or high-frequency ones. Both models are currently accessible for preview in over 200 countries and territories and will be generally available starting in June.

Updates on Gemma 2: Launching in June

During Tuesday’s annual Google I/O 2024 developer conference, Google unveiled several new enhancements to Gemma, its collection of models, and said Gemma 2 will launch in June. Among the additions is PaliGemma, a pre-trained Gemma variant described as the first vision language model in the Gemma family, designed for tasks like describing images, labeling them, and answering questions about them. The Gemma models have been downloaded countless times across the various services where they’re offered.