Arm Wants To Put An AI Chip Everywhere It Can, Launches ‘Project Trillium’

Hardly any mobile device today ships without technology from the British semiconductor giant Arm Holdings.

Now the company is moving into artificial intelligence, following other technology companies determined to put AI chips in their products. The list includes Google, Apple, and, most recently, Amazon, which is working on a custom processor to make Alexa faster.

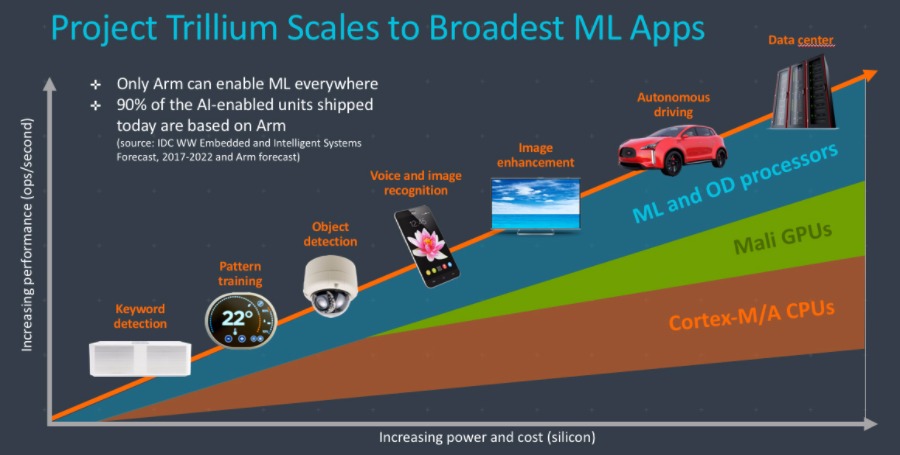

Arm has announced a new initiative called Project Trillium, which introduces two new processor architectures to bring AI capabilities to even more devices.

According to the company, today’s machine learning efforts are mostly focused on smartphones. Although the initial implementation of the project also revolves around mobile devices, it will later expand to cover sensors, smart speakers, cameras, and other IoT devices.

Of the two new processors, the Arm ML processor is designed specifically for machine learning and is based on Arm’s ML architecture. Built from scratch, it can perform around 4.6 trillion operations per second (teraops) and handles demanding machine learning tasks efficiently, delivering three teraops per watt.
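As a rough back-of-the-envelope check, the two quoted figures imply a power budget for the ML processor. The sketch below assumes both numbers describe the same operating point, which Arm's announcement does not state; the function name is only illustrative.

```python
def implied_power_watts(teraops: float, teraops_per_watt: float) -> float:
    """Power implied by a throughput figure and an efficiency figure.

    Assumption: both figures apply at the same operating point.
    """
    return teraops / teraops_per_watt


# Figures quoted in the article: ~4.6 teraops at 3 teraops per watt.
power = implied_power_watts(4.6, 3.0)
print(f"Implied power draw: about {power:.2f} W")
```

Under that assumption the chip would draw roughly one and a half watts at peak, which is in the range a phone could plausibly sustain.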

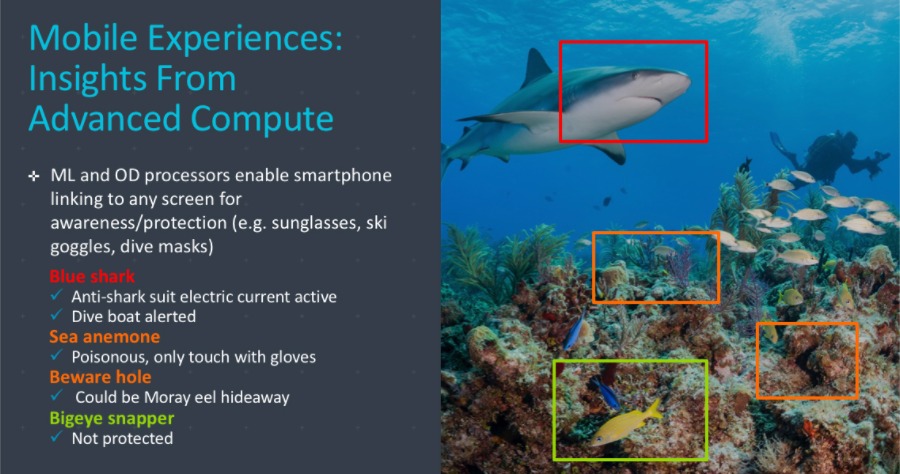

The other is the Arm OD processor, which has object detection capabilities to identify people and objects; it can process Full HD video at 60fps. In fact, the OD processor is the second generation of its kind; the first generation already powers Hive security cameras.

Alongside the processors comes Arm’s neural network software, which the company promotes as a bridge between well-known ML frameworks like TensorFlow, Caffe, and Android NN and Arm-based CPUs, GPUs, and machine learning chips.
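To put the Full HD at 60fps figure in perspective, here is a small calculation of the raw pixel rate the OD processor would have to keep up with. It assumes "FHD" means the usual 1920x1080 resolution; the article does not give the exact frame size.

```python
# Assumption: FHD is 1920x1080 pixels per frame.
FHD_WIDTH = 1920
FHD_HEIGHT = 1080
FRAMES_PER_SECOND = 60

pixels_per_frame = FHD_WIDTH * FHD_HEIGHT
pixels_per_second = pixels_per_frame * FRAMES_PER_SECOND

print(f"Pixels per frame:  {pixels_per_frame:,}")
print(f"Pixels per second: {pixels_per_second:,}")
```

That works out to a little over 124 million pixels every second, before any detection work is done on them.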

For the best possible implementation, Arm says OEMs should use the ML and OD chips in combination. For example, in a facial recognition system, the OD chip would identify human faces and the ML chip would process that data to do the actual face recognition, all in real time.
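The division of labour Arm describes can be pictured as a two-stage pipeline. The sketch below is purely illustrative: `fake_detect` and `fake_identify` are hypothetical stand-ins for the OD and ML stages, not Arm APIs, and a tiny grid of numbers plays the role of a video frame.

```python
from typing import Callable, List, Tuple

Box = Tuple[int, int, int, int]  # (x, y, width, height)
Image = List[List[int]]          # rows of grayscale pixel values


def crop(image: Image, box: Box) -> Image:
    """Cut the region described by box out of the image."""
    x, y, w, h = box
    return [row[x:x + w] for row in image[y:y + h]]


def recognize(
    image: Image,
    detect: Callable[[Image], List[Box]],
    identify: Callable[[Image], str],
) -> List[Tuple[Box, str]]:
    """Stage 1 finds face regions; stage 2 labels each cropped region."""
    return [(box, identify(crop(image, box))) for box in detect(image)]


# Hypothetical stand-in for the OD stage: always reports one fixed box.
def fake_detect(image: Image) -> List[Box]:
    return [(1, 1, 2, 2)]


# Hypothetical stand-in for the ML stage: labels any non-empty crop.
def fake_identify(face: Image) -> str:
    return "person-A" if sum(map(sum, face)) > 0 else "unknown"


frame = [
    [0, 0, 0, 0],
    [0, 5, 6, 0],
    [0, 7, 8, 0],
    [0, 0, 0, 0],
]
print(recognize(frame, fake_detect, fake_identify))
```

The point of the split is that the cheap detection stage narrows each frame down to a few regions, so the heavier recognition stage only runs on the parts that matter.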

Project Trillium can be seen as Arm’s effort to give its machine learning and object detection architectures the same standing in the market that its Cortex CPU architectures already have.

Having onboard chips for AI processing also addresses a couple of widely discussed concerns: privacy and security. The growth of cloud-based data processing is making many people uncomfortable, for instance when every word they say ends up on some cloud server, thanks to their digital assistants.

At the upcoming MWC 2018 in Barcelona, Arm will demonstrate early implementations of its object detection processor.
