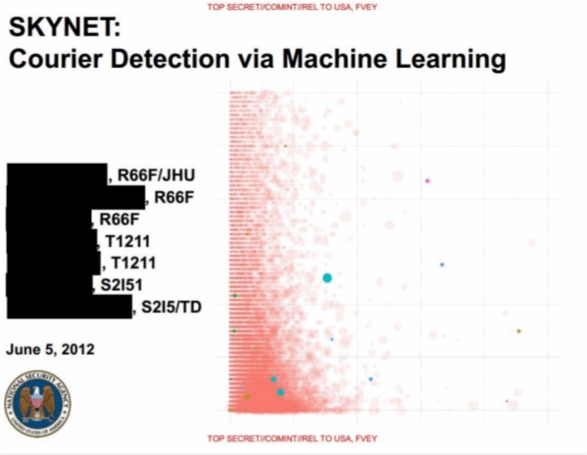

NSA’s SKYNET Algorithm May Be Killing Thousands Of Innocent People, Says Expert

Short Bytes: Under its SKYNET program, the NSA is known to study metadata to identify terrorists. An expert who recently analyzed leaked documents has pointed out multiple flaws in the machine learning algorithm used to estimate how likely a person is to be a terrorist. As a result, the NSA may have killed thousands of innocent people misclassified as “terrorists”.

Patrick Ball, a data scientist and the executive director of the Human Rights Data Analysis Group, has described the NSA’s methods as “completely bullshit” and “ridiculously optimistic”. Speaking to Ars Technica, Ball explained how a flaw in SKYNET’s machine learning algorithm could make its results very dangerous.

The leaked NSA documents suggest that the machine learning program may have been in use since 2007. Ball says that since then, thousands of innocent people may have been wrongly labeled as terrorists and killed.

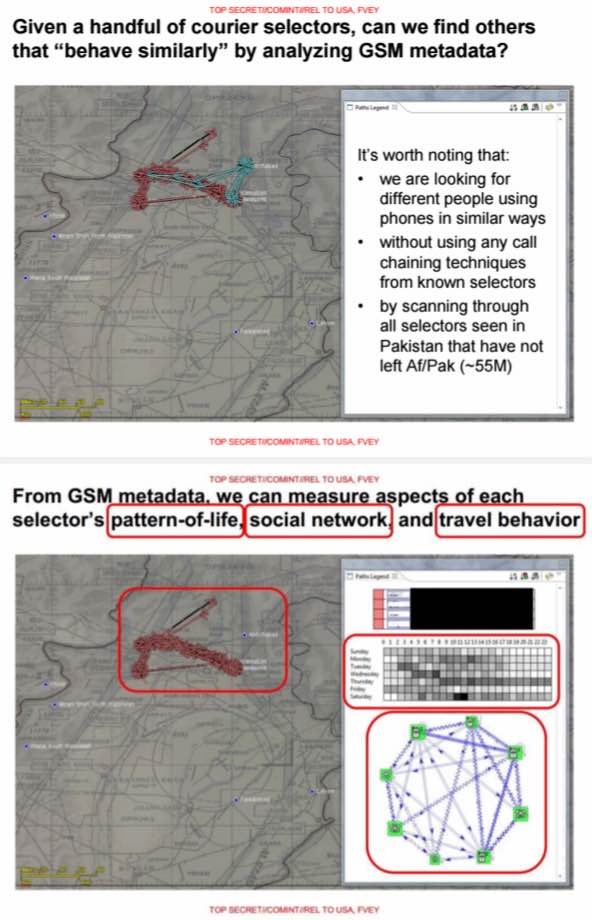

SKYNET collects metadata such as dialled numbers, call duration and time, who called whom, and users’ locations. Surprisingly, even users who switch off their phones or change SIM cards are flagged. SKYNET stitches all of this information together and builds a profile of each person’s daily routine using more than 80 different properties. The program assumes that terrorists behave differently from ordinary people.
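To make the idea of a per-person profile more concrete, here is a minimal Python sketch that aggregates call records into a handful of behavioural features. The `CallRecord` layout, the feature names and `build_profiles` are hypothetical illustrations; the real SKYNET schema and its 80-plus properties are not public.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class CallRecord:
    # Hypothetical record layout; the real SKYNET schema is not public.
    caller: str       # handset identifier of the person placing the call
    callee: str       # number that was dialled
    hour: int         # hour of day the call started, 0-23
    duration_s: int   # call length in seconds
    tower: str        # cell tower that handled the call (rough location)
    sim_id: str       # SIM card used by the caller's handset


def build_profiles(records):
    """Aggregate raw call records into a per-caller feature dictionary."""
    grouped = defaultdict(list)
    for r in records:
        grouped[r.caller].append(r)

    profiles = {}
    for person, calls in grouped.items():
        n = len(calls)
        profiles[person] = {
            "num_calls": n,
            "distinct_contacts": len({c.callee for c in calls}),
            "mean_duration_s": sum(c.duration_s for c in calls) / n,
            "night_call_fraction": sum(1 for c in calls if c.hour < 6) / n,
            "distinct_towers": len({c.tower for c in calls}),
            # More than one SIM seen in the same handset suggests a SIM swap.
            "sim_swaps": len({c.sim_id for c in calls}) - 1,
        }
    return profiles


# Usage with three made-up records.
records = [
    CallRecord("A", "B", 2, 60, "t1", "s1"),
    CallRecord("A", "C", 14, 120, "t2", "s2"),
    CallRecord("D", "B", 10, 30, "t1", "s3"),
]
profiles = build_profiles(records)
print(profiles["A"])
```

Each profile is just a fixed-length set of numbers per person, which is the kind of input a classifier can score.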

The algorithm is also trained on data about known terrorists so that it learns to identify others. “The problem is that there are relatively few ‘known terrorists’ to feed the algorithm, and real terrorists are unlikely to answer a hypothetical NSA survey into the matter. The internal NSA documents suggest that SKYNET uses a set of ‘known couriers’ as ground truths, and assumes by default the rest of the population is innocent,” writes Ars.

The report reveals that in 2012, out of 120 million cellular handsets in Pakistan, the NSA analysed 55 million phone records. SKYNET’s classifier then assigns each individual a score based on their metadata, with the aim of giving real terrorists high scores and ordinary people low scores. The NSA does this using a random forest algorithm, a technique commonly used in big data applications.
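For readers unfamiliar with random forests, the sketch below shows the general technique in plain Python: many small decision trees, each fitted to a bootstrap sample of the data using a randomly chosen subset of features, whose outputs are averaged into a score between 0 and 1. To keep it short, every tree is a depth-one "stump". `fit_forest`, `score` and the toy data are hypothetical and say nothing about how the NSA actually configured its model.

```python
import random


def gini(labels):
    """Gini impurity of a list of 0/1 labels."""
    if not labels:
        return 0.0
    p = sum(labels) / len(labels)
    return 2 * p * (1 - p)


def fit_stump(X, y, features):
    """Pick the (feature, threshold) split among `features` with lowest weighted Gini."""
    best = None
    for f in features:
        for t in sorted({row[f] for row in X}):
            left = [yi for row, yi in zip(X, y) if row[f] <= t]
            right = [yi for row, yi in zip(X, y) if row[f] > t]
            cost = len(left) * gini(left) + len(right) * gini(right)
            if best is None or cost < best[0]:
                # Each side predicts the fraction of positives it holds (0.0 if empty).
                left_p = sum(left) / len(left) if left else 0.0
                right_p = sum(right) / len(right) if right else 0.0
                best = (cost, f, t, left_p, right_p)
    _, f, t, left_p, right_p = best
    return f, t, left_p, right_p


def fit_forest(X, y, n_trees=25, max_features=1, seed=0):
    """Train an ensemble of stumps, each on a bootstrap sample and random features."""
    rng = random.Random(seed)
    n, d = len(X), len(X[0])
    forest = []
    for _ in range(n_trees):
        # Bootstrap: draw n rows with replacement.
        idx = [rng.randrange(n) for _ in range(n)]
        Xb = [X[i] for i in idx]
        yb = [y[i] for i in idx]
        # Random feature subset; a stump has one split, so this is per split too.
        feats = rng.sample(range(d), max_features)
        forest.append(fit_stump(Xb, yb, feats))
    return forest


def score(forest, row):
    """Average the per-tree positive fractions into a score in [0, 1]."""
    total = 0.0
    for f, t, left_p, right_p in forest:
        total += left_p if row[f] <= t else right_p
    return total / len(forest)


# Toy training data: two features per person, label 1 = "known positive".
X = [[9, 9], [10, 8], [8, 10], [1, 2], [2, 1], [0, 0], [1, 1], [2, 2]]
y = [1, 1, 1, 0, 0, 0, 0, 0]
forest = fit_forest(X, y)
print(score(forest, [9, 9]), score(forest, [1, 1]))
```

Averaging many trees trained on resampled data is what makes the score a smooth number rather than a yes/no answer, which is why a separate threshold is needed to turn it into a classification.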

SKYNET then applies a threshold value: anyone whose score is above it is classified as a terrorist. The NSA slides present the evaluation results at a threshold chosen to give a 50 percent false negative rate, meaning half of the actual terrorists in the test data would be missed. Why would the NSA accept so many misses?

“The reason they’re doing this,” Ball explains, “is because the fewer false negatives they have, the more false positives they’re certain to have. It’s not symmetric: there are so many true negatives that lowering the threshold in order to reduce the false negatives by 1 will mean accepting many thousands of additional false positives. Hence this decision.”
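Ball's point about asymmetry can be checked with a small, entirely synthetic example: when negatives vastly outnumber positives, lowering the threshold catches a few more positives but flags far more negatives. The score distributions and the `confusion_at` helper below are made up for illustration and are not taken from the NSA slides.

```python
def confusion_at(threshold, scores, labels):
    """Count false negatives and false positives when score >= threshold means 'flagged'."""
    fn = sum(1 for s, y in zip(scores, labels) if y == 1 and s < threshold)
    fp = sum(1 for s, y in zip(scores, labels) if y == 0 and s >= threshold)
    return fn, fp


# Synthetic, heavily imbalanced population: 10 positives, 100,000 negatives.
N_NEG = 100_000
neg_scores = [0.6 * i / N_NEG for i in range(N_NEG)]   # spread over [0, 0.6)
pos_scores = [0.4 + 0.6 * j / 9 for j in range(10)]    # spread over [0.4, 1.0]
scores = neg_scores + pos_scores
labels = [0] * N_NEG + [1] * 10

for t in (0.7, 0.5):
    fn, fp = confusion_at(t, scores, labels)
    print(f"threshold={t}: false negatives={fn}, false positives={fp}")
# threshold=0.7: false negatives=5, false positives=0
# threshold=0.5: false negatives=2, false positives=16666
```

In this toy setup, catching three more positives costs 16,666 extra false positives, the same lopsided trade-off Ball describes.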

The model is trained and tested on data from only a handful of known terrorists. The usual practice is to use a large data set, so the NSA’s analysis does not provide a good indicator of the method’s quality. A false positive rate of 0.008% would be considered remarkably low in traditional business applications, but applied to tens of millions of people, the NSA’s false positive rate of 0.18% means thousands of innocent people could be misclassified as “terrorists” and potentially killed.
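The arithmetic behind “thousands of innocents” is a simple multiplication. The sketch below assumes, for illustration, that the rates apply to all 55 million phone records mentioned earlier and that each record corresponds to one person; `expected_false_positives` is a hypothetical helper.

```python
def expected_false_positives(population: int, fpr_percent: float) -> int:
    """Expected number of innocent people flagged at a given false positive rate (in %)."""
    return round(population * fpr_percent / 100)


# Assumption: the 55 million phone records cited for 2012, one person each.
POPULATION = 55_000_000

for fpr in (0.18, 0.008):
    flagged = expected_false_positives(POPULATION, fpr)
    print(f"{fpr}% of {POPULATION:,} -> {flagged:,}")
# 0.18% of 55,000,000 -> 99,000
# 0.008% of 55,000,000 -> 4,400
```

Even the much lower 0.008% rate still implies thousands of people wrongly flagged at this scale.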

You can read the complete report on Ars Technica and share your views in the comments below.
