Apple Intelligence Review: A Promising Start

When Apple took the stage a couple of months back, the AI features it announced seemed almost revolutionary and promised seamless integration. Now that ChatGPT-enabled Siri, smart replies, and the object remover have arrived with iOS 18.1 and iOS 18.2, you might wonder whether these features are worth shelling out the money for. And how do they compare with the likes of Samsung and Google? After testing the new Apple Intelligence features for some time, here’s our review.

Siri: A Major Overhaul

When Siri first launched more than a decade ago, it was revolutionary. However, with time and a lack of meaningful updates, the voice assistant fell behind its rivals and struggled with even basic tasks. With Apple Intelligence, Siri has undergone a significant overhaul, noticeable right from the new animation that glows around the edges of the screen and adds a visually pleasing touch.

Performance-wise, Siri’s voice recognition has improved dramatically. It now handles natural pauses and filler words like “umm” for a smoother experience. One of the standout improvements is Siri’s ability to retain context. For example, if you ask, “What’s the weather like tomorrow?” and then follow up with “How about this weekend?”, Siri understands that you’re still asking about the weather.

ChatGPT Integration

Perhaps the best addition to the new Siri is its ChatGPT integration. When Siri doesn’t have an answer to a particular question, it can hand the query off to ChatGPT. I asked more complex questions, such as “Can you suggest a five-course meal to help me lose weight?”, and the answers were quite good: it gave me a sensible list of dishes to try. iPhone users also don’t need an OpenAI account to access this feature.

Unfortunately, you only get a limited number of ChatGPT prompts every day. If you want to use ChatGPT more often, you’ll need to subscribe to ChatGPT Plus, which costs $20 per month.

Object Eraser & Photos

Like Google, Apple finally has an object eraser tool, called Clean Up, which lets users remove unwanted background objects from photos for a less distracting look. Clean Up worked well in my testing on small objects against a plain background. However, it struggled with bigger objects and with photos that have complicated backgrounds.

Furthermore, unlike Samsung’s implementation, which also lets users add objects with generative AI, Apple’s tool is limited to removing objects.

The Photos app also received a major AI upgrade: it now lets users search for specific images using natural language. This was particularly helpful in locating a beach photo where I was facing away from the camera, a shot I wanted to share on Instagram.

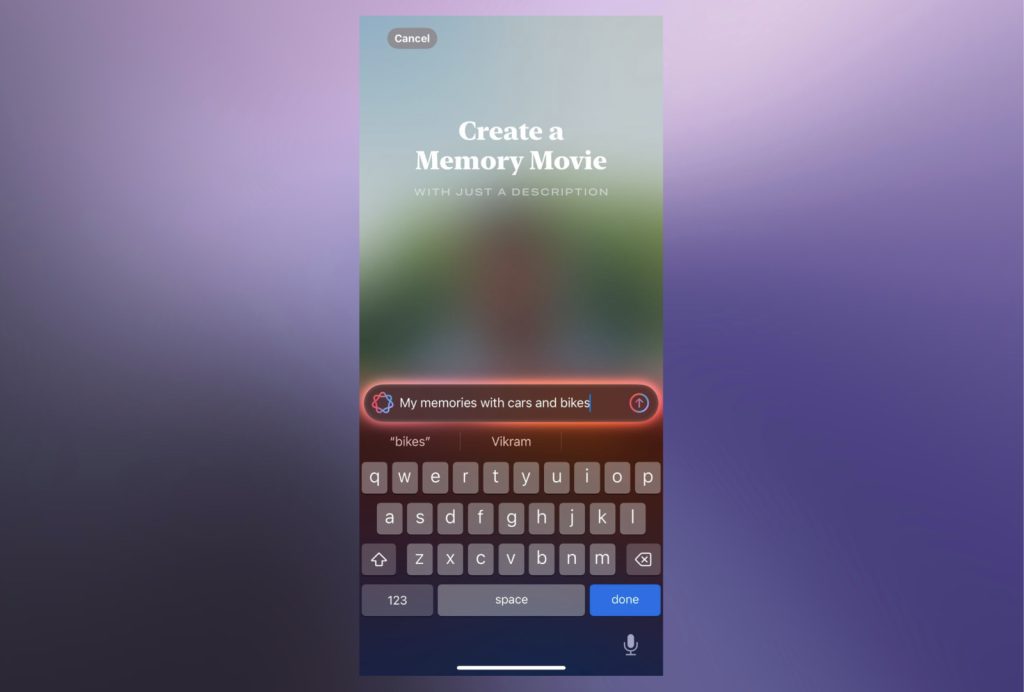

Additionally, the Photos app can now create “Memories” based on different prompts. While it worked well for broader topics, like “cars and bikes,” it struggled to retrieve accurate results for prompts like “best food I ate in Delhi.”

Image Playground

After writing, image generation is the next headline feature of generative AI, and Apple’s answer is Image Playground. The feature lets users upload their own images and restyle them using different presets.

I got access to the feature after a week on the waiting list, and the experience was better than I expected. Of course, the images are cartoonish and can sometimes look a bit odd, but if all you want is a fun picture, Image Playground works surprisingly well.

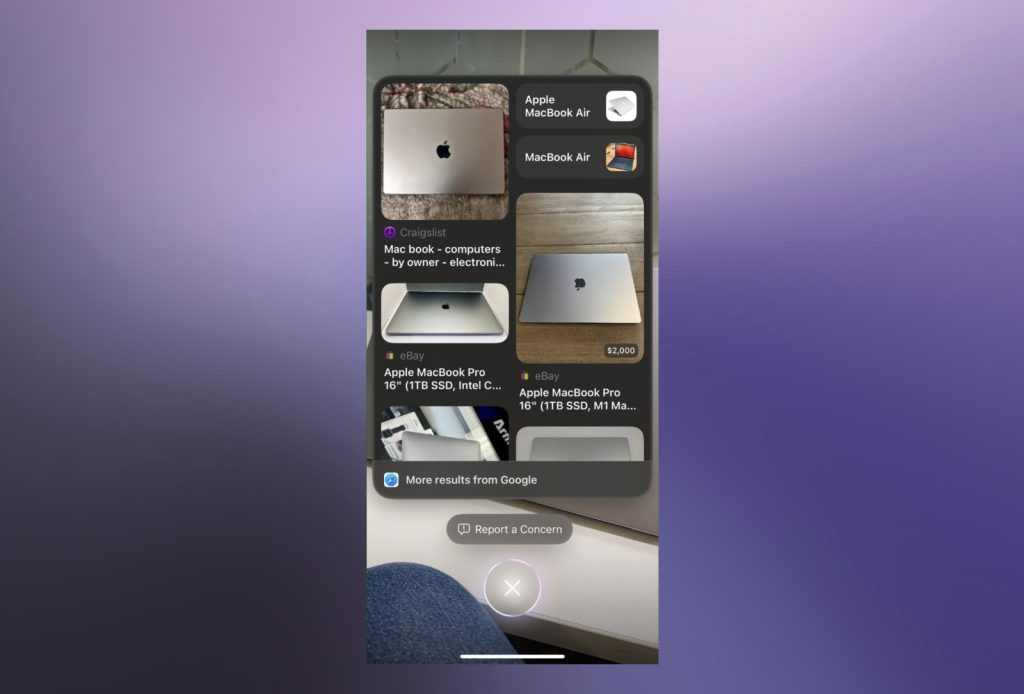

Visual Look Up

We’ve all been on a trip to a new place, spotted a beautiful building or flower, and wondered, “What is that?” If you often find yourself in this situation, Visual Look Up is a great addition. The tool lets you identify plants, animals, landmarks, and more directly from your photos.

Simply take a picture or open an existing one, then tap the information icon. In my testing, the feature worked much like Google Lens and gave accurate results for almost everything I tried.

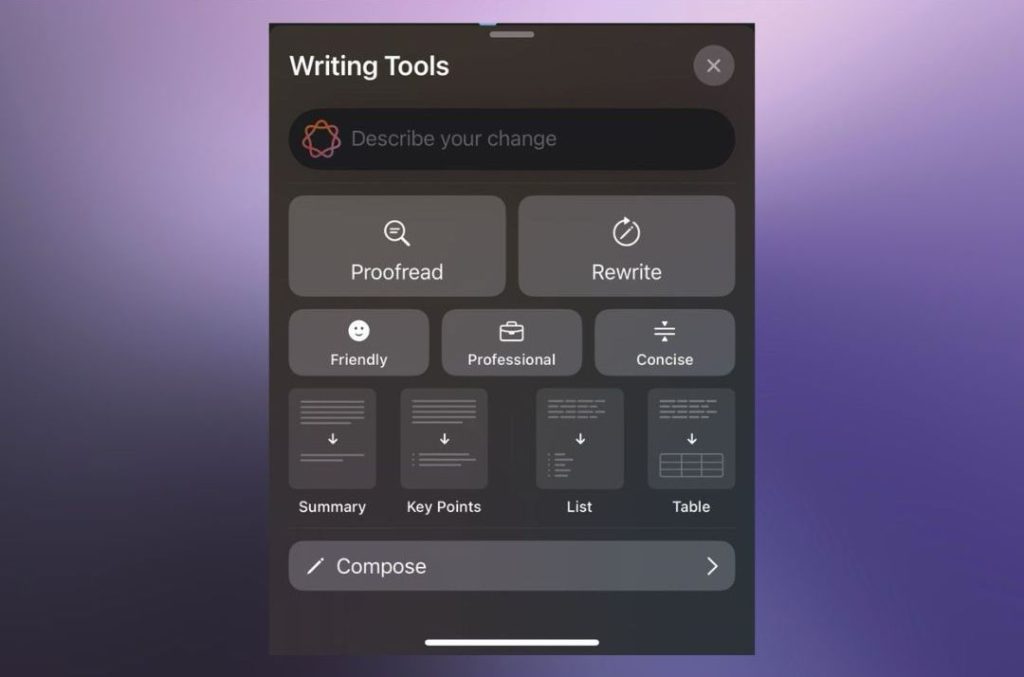

Writing Tools

One of the most practical uses of generative AI is helping rewrite messages and emails. With the new Writing Tools, Apple now lets users rewrite, proofread, and summarize text without relying on third-party apps. To use them, highlight the text, then select an option or type a custom prompt.

I tested this feature while drafting a formal invitation to a Diwali party for one of my colleagues, and it worked surprisingly well. The tone was appropriate, there were no contextual mistakes, and the message came across accurately.

Message Summaries

Notification management has long been an Achilles’ heel for many iPhone users, and Message Summaries aims to change that. As the name suggests, this feature condenses notifications into concise overviews, letting users grasp the main points without opening each message.

This feature has been super helpful in understanding the context of long messages and emails. However, while the summaries work well with short- to medium-length conversations, they can get confused by longer threads.

For example, I received an event invitation, along with an RSVP, for a colleague and me. The event manager later informed me that the venue might change, but when I checked the summary, it incorrectly stated that the event had been canceled.

Conclusion

Apple Intelligence is a big leap forward in bringing AI to everyday consumers. However, its current implementation makes it clear that it is still in its early stages. Features like Clean Up, Message Summaries, and Memories still need polishing.

That being said, Apple Intelligence features like Visual Look Up, Writing Tools, and the new ChatGPT-enabled Siri work surprisingly well and help improve the overall smartphone experience.