Google Open Sources AI Code That Makes Pixel 2’s Portrait Mode So Amazing

As the first notable flagship of 2018, the Samsung Galaxy S9 brought a first-of-its-kind variable-aperture camera to the smartphone industry. However, Google’s Pixel 2 camera remains one of the best around, largely thanks to an intelligent portrait mode that doesn’t need a dual-camera setup.

To let developers take advantage of the technology powering the camera, Google has open-sourced it through its TensorFlow AI framework.

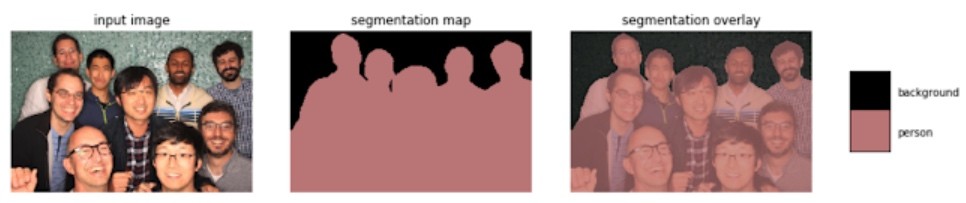

Called DeepLab-v3+, this “semantic image segmentation model” assigns a label to every pixel in an image, describing whether that pixel is part of a person, a dog, a cat, and so on. With this technology, Google identifies and separates objects at the pixel level and then blurs the background.
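
The per-pixel idea can be illustrated with a toy, pure-Python sketch. It assumes a segmentation network has already produced a score for every class at every pixel (the nested-list `scores` input is a stand-in, not DeepLab’s actual output format), picks the highest-scoring class for each pixel, and replaces every pixel not labelled as a person with a blurred copy. The helper names, the simple box blur and the use of index 15 for “person” (its position in the PASCAL VOC 2012 label set) are illustrative assumptions; this is not Google’s implementation.

```python
"""Toy sketch of segmentation-based background blur (assumed pipeline, not Google's code)."""

PERSON = 15  # index of "person" in the PASCAL VOC 2012 label set (assumed label set)


def label_map(scores):
    """Assign each pixel the class with the highest score.

    scores[y][x] is a list of per-class scores for pixel (y, x), a hypothetical
    stand-in for the output of a segmentation network such as DeepLab-v3+.
    """
    return [[max(range(len(s)), key=s.__getitem__) for s in row] for row in scores]


def box_blur(image, radius=1):
    """Average each pixel with its neighbours in a (2r+1)x(2r+1) window, clipped at the borders."""
    h, w = len(image), len(image[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            window = [
                image[j][i]
                for j in range(max(0, y - radius), min(h, y + radius + 1))
                for i in range(max(0, x - radius), min(w, x + radius + 1))
            ]
            out[y][x] = sum(window) / len(window)
    return out


def portrait(image, labels, keep=PERSON, radius=1):
    """Keep pixels labelled `keep` sharp; take every other pixel from a blurred copy."""
    blurred = box_blur(image, radius)
    return [
        [image[y][x] if labels[y][x] == keep else blurred[y][x] for x in range(len(image[0]))]
        for y in range(len(image))
    ]


if __name__ == "__main__":
    img = [[0, 0, 0], [0, 9, 0], [0, 0, 0]]
    labels = [[0, 0, 0], [0, PERSON, 0], [0, 0, 0]]  # only the centre pixel is "person"
    print(portrait(img, labels))
```

On this 3x3 example the centre pixel, labelled as a person, keeps its value of 9, while the background pixels around it are averaged with their neighbours. A real pipeline would work on full-colour images and use a far better blur, but the mask-then-composite structure is the same idea the article describes.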

According to the official blog post, DeepLab-v3+ models are built on top of a powerful convolutional neural network (CNN). The company has also shared its TensorFlow model training and evaluation code.
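
Segmentation models of this kind are commonly scored with mean intersection-over-union (mIoU): for each class, the number of pixels where prediction and ground truth both carry that class, divided by the number of pixels where either does, averaged over classes. The sketch below assumes that metric; it is a minimal pure-Python illustration, not the released evaluation code, and `mean_iou` is a hypothetical helper name.

```python
def mean_iou(pred, truth, num_classes):
    """Mean intersection-over-union between two label maps of equal shape.

    Classes that appear in neither map (union of zero) are skipped so they
    do not distort the mean.
    """
    inter = [0] * num_classes
    union = [0] * num_classes
    for p_row, t_row in zip(pred, truth):
        for p, t in zip(p_row, t_row):
            if p == t:
                # Pixel lies in both the predicted and true region of class p.
                inter[p] += 1
                union[p] += 1
            else:
                # Pixel belongs to predicted class p and true class t, but not both.
                union[p] += 1
                union[t] += 1
    ious = [i / u for i, u in zip(inter, union) if u > 0]
    return sum(ious) / len(ious) if ious else 0.0


if __name__ == "__main__":
    pred = [[0, 1], [1, 1]]
    truth = [[0, 1], [0, 1]]
    print(mean_iou(pred, truth, num_classes=2))
```

Here class 0 scores 1/2 and class 1 scores 2/3, so the mean is 7/12 (about 0.583). Averaging per class rather than per pixel keeps small classes, such as a thin object in a mostly-background scene, from being swamped by the background.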

Note that this open source release doesn’t mean every future smartphone will be able to replicate what Google is doing with its portrait mode. Google has shared the underlying technology, but developers will still need their own expertise to put the code to use. The release does, however, open up possibilities for new and exciting applications in object detection and other fields.

It will be interesting to see how the open source community responds to the DeepLab-v3+ release and what it builds with it.
