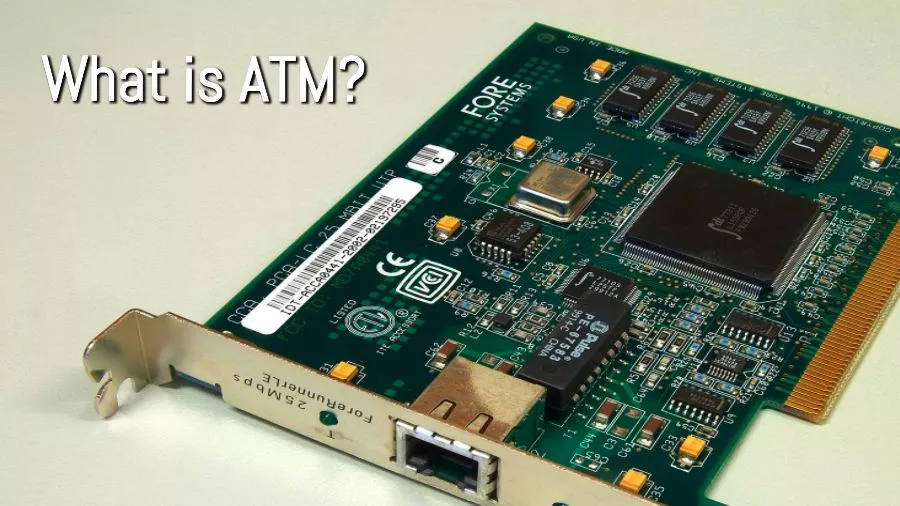

ATM In Computer Networks: History And Basic Concepts

History of ATM

Let’s look at why ATM (Asynchronous Transfer Mode) was developed in the first place. In the 1990s, both mobile data speeds and internet speeds grew rapidly. At the same time, real-time applications such as voice and video calls were starting to come into play. In a nutshell, the internet world and the telephony world were converging. As a result, networking QoS (Quality of Service) factors such as latency, jitter, data rate, and real-time delivery became more important.

Nonetheless, most of the internet world still relied on the same underlying hardware, protocols, and software. On top of that, no solid concept or technology at the telecommunication level could address all of these newly arising issues at once. So, ATM was born.

Basic ATM concepts

Here are some of the fundamental ATM concepts which are used most often:

Cells

In ATM, the basic unit of data transfer is called a cell. Where the data link layer calls its transfer units frames, ATM calls its transfer units cells. Every cell is 53 bytes long: a 5-byte header followed by a 48-byte payload. That means the header takes up about 9.4% (5/53) of every cell, or roughly 10%.

For example, if 53 GB worth of cells is transmitted over ATM, about 5 GB of it is just headers. To process that many headers, the switching hardware has to be scalable and fast enough to keep up. Can you guess how ATM addresses this? If not, keep on reading…

Because cells are small and have a fixed size (53 bytes), the buffering hardware could be simpler. However, sending small amounts of data carried a lot of overhead, since even a tiny message occupies a whole cell and the last cell of any message has to be padded. Segmentation and reassembly also added cost, because of the large share of header bytes and the overhead of processing them.
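The overhead arithmetic above can be checked with a small calculation. This is a minimal Python sketch, assuming all 48 payload bytes carry user data and ignoring any adaptation-layer trailer; the function names are hypothetical.

```python
CELL_SIZE = 53                           # bytes in every ATM cell
HEADER_SIZE = 5                          # header bytes per cell
PAYLOAD_SIZE = CELL_SIZE - HEADER_SIZE   # 48 payload bytes per cell


def cells_needed(data_bytes: int) -> int:
    """Cells required for data_bytes of user data; the last cell is padded."""
    return (data_bytes + PAYLOAD_SIZE - 1) // PAYLOAD_SIZE


def wire_bytes(data_bytes: int) -> int:
    """Bytes actually transmitted, including headers and padding."""
    return cells_needed(data_bytes) * CELL_SIZE


def efficiency(data_bytes: int) -> float:
    """Share of transmitted bytes that is user data."""
    if data_bytes <= 0:
        raise ValueError("data_bytes must be positive")
    return data_bytes / wire_bytes(data_bytes)


print(f"header share of one cell: {HEADER_SIZE / CELL_SIZE:.1%}")  # 9.4%
for size in (1, 40, 48, 49, 1500):
    print(f"{size:>5} bytes -> {cells_needed(size):>2} cells, "
          f"{wire_bytes(size):>5} bytes on the wire, {efficiency(size):.1%} efficient")
```

A 49-byte message needs two cells, so less than half of the 106 bytes sent is user data, while a completely filled 48-byte payload reaches about 90.6%.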

ATM Layers

Like the OSI model, ATM is divided into layers, and each layer is assigned its own set of functions. We describe these layers later in the article.

Class of services

As discussed above, ATM was introduced to address the problems of real-time data and latency. To serve users with different requirements, ATM also defines a set of service classes based on factors such as bit rate (constant or variable) and timing (how strictly the source and destination must stay synchronized). The different service classes are covered later in the article.

Asynchronous Data Transfer

ATM transfers data asynchronously using statistical multiplexing: a connection uses link capacity only when it has cells to send. This is unlike a circuit-switched network, where bandwidth reserved for a connection is wasted whenever no data transfer is taking place.
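To make the contrast concrete, here is a toy Python model, not a real scheduler: each source has some cells queued, and we record which source uses each time slot. In the circuit-style version every source owns one slot per frame whether it uses it or not; in the statistically multiplexed version idle sources are simply skipped. The function names and queue contents are illustrative assumptions.

```python
from typing import Optional


def tdm_schedule(queues: dict[str, int]) -> list[Optional[str]]:
    """Circuit-style TDM: each source owns one slot per frame, used or not.

    Returns the slot-by-slot schedule; None marks a reserved slot left empty.
    """
    remaining = dict(queues)            # copy so the caller's dict is untouched
    schedule: list[Optional[str]] = []
    while any(remaining.values()):
        for src in remaining:
            if remaining[src] > 0:
                remaining[src] -= 1
                schedule.append(src)
            else:
                schedule.append(None)    # the owner had nothing to send
    return schedule


def statmux_schedule(queues: dict[str, int]) -> list[str]:
    """Statistical multiplexing: slots go round-robin to sources with queued cells."""
    remaining = dict(queues)
    schedule: list[str] = []
    while any(remaining.values()):
        for src in remaining:
            if remaining[src] > 0:       # idle sources are skipped, no slot wasted
                remaining[src] -= 1
                schedule.append(src)
    return schedule


queues = {"A": 6, "B": 0, "C": 2}      # illustrative backlog per source
tdm = tdm_schedule(queues)
stat = statmux_schedule(queues)
print(len(tdm), tdm.count(None))       # 18 10
print(len(stat))                       # 8
```

With one busy source, one idle source, and one light source, the fixed-slot schedule takes 18 slots and leaves 10 of them empty, while statistical multiplexing sends the same 8 cells in 8 slots.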

Connection Type

However, ATM is connection-oriented, mostly for the sake of Quality of Service. Before any data is sent, ATM sets up a connection to the destination, and all the cells then follow the same path. That means the order of the cells is guaranteed, but their delivery is not.

This connection is called a virtual circuit. A path from the source to the destination has to be found before the data transfer starts. Along that path, every ATM switch allocates some resources for the connection based on the class of service the user has chosen.
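The per-hop resource allocation described above can be sketched as an all-or-nothing reservation. The Python below is a hypothetical model: `Switch`, `setup_vc`, and `teardown_vc` are illustrative names, bandwidth is reduced to a single Mbps figure, and the path is assumed to have been chosen already (route selection is not modeled).

```python
from dataclasses import dataclass, field


@dataclass
class Switch:
    """Hypothetical ATM switch with a fixed amount of bandwidth to hand out."""
    name: str
    capacity_mbps: float
    reserved: dict[int, float] = field(default_factory=dict)  # vc_id -> Mbps

    def free(self) -> float:
        return self.capacity_mbps - sum(self.reserved.values())


def setup_vc(path: list[Switch], vc_id: int, rate_mbps: float) -> bool:
    """Reserve rate_mbps on every switch along path, or on none of them."""
    done: list[Switch] = []
    for sw in path:
        if sw.free() < rate_mbps:
            for prev in done:              # roll back the partial setup
                del prev.reserved[vc_id]
            return False                   # call rejected
        sw.reserved[vc_id] = rate_mbps
        done.append(sw)
    return True


def teardown_vc(path: list[Switch], vc_id: int) -> None:
    """Release the resources held by vc_id on every hop."""
    for sw in path:
        sw.reserved.pop(vc_id, None)


# Illustrative three-hop path; capacities are made-up numbers.
path = [Switch("A", 155), Switch("B", 100), Switch("C", 155)]
print(setup_vc(path, vc_id=1, rate_mbps=60))   # True
print(setup_vc(path, vc_id=2, rate_mbps=50))   # False: B has only 40 Mbps left
print([sw.free() for sw in path])              # [95, 40, 95]
```

Releasing earlier reservations when a later hop refuses the call keeps a failed setup from leaving resources stranded on part of the path.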

Transmission Medium

One of the main goals behind ATM was to make all types of data transmission independent of the physical medium. That means it could serve the telephony side as well as the internetworking side. The transmission medium could be a CAT6 cable, a fiber cable, or a WAN link, and ATM cells could even be carried inside the payload of other carrier systems such as SONET/SDH. With this flexibility, ATM was expected to deliver good quality of service in most cases.

Being independent of the medium also brought a lot of problems for ATM, which were addressed at the convergence layer.

Some other interesting facts follow from the points above:

- ATM can carry both CBR (Constant Bit Rate) and VBR (Variable Bit Rate) traffic, because sending fixed-size cells only when needed lets it adapt flexibly to different transmission rates.

Quality of Service and Service categories in ATM:

In ATM, Quality of Service is handled in different ways depending on a number of criteria. Based on these criteria, several service categories were introduced, such as Available Bit Rate, Unspecified Bit Rate, Constant Bit Rate, and Variable Bit Rate.

ATM Layers

Like the OSI model, the ATM protocol stack is divided into layers, three in this case, each with its own functions:

- ATM Adaptation Layer (AAL), made up of:
  - Convergence Sublayer (CS)
  - Segmentation & Reassembly Sublayer (SAR)
- ATM Layer
- Physical Layer, made up of:
  - Transmission Convergence Sublayer (TC)
  - Physical Medium Dependent Sublayer (PMD)

ATM Adaptation Layer (AAL)

The main function of the adaptation layer is to map application data onto ATM cells. It acts as the interface between the applications (or higher-layer protocols) above it and the cell-based ATM layer below it.

Besides, the adaptation layer is also responsible for segmenting the data into cells and reassembling them at the destination host.

Convergence sublayer

We have already seen that ATM was introduced to handle different types of data along with variable transmission rates. The convergence sublayer is responsible for these features.

In simpler terms, the convergence sublayer is where different types of traffic, such as voice, video, and data, converge.

The convergence sublayer offers different kinds of services to different applications such as voice, video, and browsing. Since each application needs a different kind of transmission rate, the convergence sublayer makes sure that it gets what it needs. A few examples of these services follow:

CBR (Constant Bit Rate)

CBR provides guaranteed bandwidth and is suitable for real-time traffic. In CBR mode, the user declares the required rate when the connection is set up, and resources are reserved for the source at each hop along the path accordingly. Besides the declared rate, the user is also guaranteed bounded delay and delay variation.
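A declared CBR bit rate translates directly into a cell rate and a fixed spacing between cells. The sketch below assumes all 48 payload bytes carry user data; in practice the adaptation layer may use part of the payload (AAL1, for instance, keeps one byte for its own header), so real cell rates are somewhat higher. The function names are hypothetical.

```python
PAYLOAD_BITS = 48 * 8  # assumption: every payload byte carries user data


def peak_cell_rate(bit_rate_bps: float) -> float:
    """Cells per second needed to sustain a constant bit rate."""
    return bit_rate_bps / PAYLOAD_BITS


def cell_interval_us(bit_rate_bps: float) -> float:
    """Fixed spacing between consecutive cells of a CBR source, in microseconds."""
    return 1e6 * PAYLOAD_BITS / bit_rate_bps


def max_cbr_calls(link_cells_per_s: int, bit_rate_bps: int) -> int:
    """Identical CBR connections a link can admit without overbooking it."""
    # Integer arithmetic avoids floating-point rounding at exact boundaries.
    return (link_cells_per_s * PAYLOAD_BITS) // bit_rate_bps


voice = 64_000  # a 64 kbit/s voice channel
print(round(peak_cell_rate(voice), 1))   # 166.7 cells/s
print(cell_interval_us(voice))           # 6000.0 us between cells
```

A 64 kbit/s voice channel therefore needs one cell roughly every 6 ms, and each switch on the path must set aside that cell rate for the whole life of the connection.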

ABR (Available Bit Rate)

ABR is suitable for bursty traffic and gives the source feedback about congestion. In ABR mode, the source relies heavily on this network feedback: if bandwidth is available, it can send more data. This helps achieve maximum throughput with minimum loss.
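The feedback loop can be sketched as a source that adjusts its allowed cell rate (ACR) whenever the network returns a resource management (RM) cell. This is a simplified Python model loosely following the ATM Forum's ABR source rules: a congestion indication (CI) cuts the rate by a fraction, no indication lets it grow, an explicit rate (ER) caps it, and the result stays between the minimum and peak cell rates. The class name and parameter values are illustrative assumptions.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class AbrSource:
    """Simplified ABR source that adapts to feedback carried in RM cells.

    Rates are in cells per second. rif and rdf are the rate increase and
    decrease factors; the defaults here are illustrative only.
    """
    pcr: float              # peak cell rate: never exceed this
    mcr: float              # minimum cell rate: never drop below this
    acr: float              # allowed cell rate: what the source may send now
    rif: float = 1 / 16
    rdf: float = 1 / 16

    def on_feedback(self, ci: bool, ni: bool = False,
                    er: Optional[float] = None) -> float:
        """Apply one backward RM cell and return the new allowed cell rate."""
        if ci:                               # congestion indicated: back off
            self.acr -= self.acr * self.rdf
        elif not ni:                         # no congestion and no "no increase"
            self.acr += self.rif * self.pcr
        if er is not None:                   # explicit rate set by the switches
            self.acr = min(self.acr, er)
        self.acr = max(self.mcr, min(self.acr, self.pcr))  # clamp to [MCR, PCR]
        return self.acr


src = AbrSource(pcr=1600, mcr=100, acr=800)
print(src.on_feedback(ci=False))           # 900.0: spare capacity, speed up
print(src.on_feedback(ci=True))            # 843.75: congestion, slow down
print(src.on_feedback(ci=False, er=500))   # 500: capped by the explicit rate
```

Growing additively and shrinking multiplicatively lets many ABR sources share spare bandwidth without the network having to reserve it in advance.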

UBR (Unspecified Bit Rate)

UBR is a cheaper solution suitable for bursty traffic. In UBR mode, the user does not tell the network what kind of data or bit rate it is going to use.

Thus, the source sends cells whenever it wants. However, this has certain disadvantages: there is no feedback about the cells that were sent, so there is no guarantee that they will reach their destination. If the network becomes congested, cells may also be dropped.
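The sketch below is a toy Python model of a switch output port carrying UBR traffic. Each tick, arriving cells join a finite buffer and the port sends up to a fixed number of cells; anything that does not fit is dropped without telling the source. The function name, the tick model, and the numbers are hypothetical.

```python
def ubr_port(arrivals: list[int], service_rate: int,
             buffer_cells: int) -> tuple[int, int]:
    """Toy output port for UBR traffic.

    arrivals[t] cells arrive during tick t; the port sends at most service_rate
    cells per tick and can hold at most buffer_cells. Cells that do not fit are
    dropped (tail drop) and the senders receive no feedback.
    Returns (delivered, dropped).
    """
    queued = delivered = dropped = 0
    for n in arrivals:
        accepted = min(n, buffer_cells - queued)   # room left in the buffer
        queued += accepted
        dropped += n - accepted                    # silently discarded
        sent = min(service_rate, queued)           # transmit what the link allows
        queued -= sent
        delivered += sent
    delivered += queued  # assume whatever is still queued drains afterwards
    return delivered, dropped


print(ubr_port([2, 2, 10, 0, 0], service_rate=2, buffer_cells=4))   # (8, 6)
print(ubr_port([1, 1, 1], service_rate=2, buffer_cells=4))          # (3, 0)
```

A burst of 10 cells into a 4-cell buffer loses 6 of them, and nothing in the model tells the senders; recovering from such losses is left to higher layers.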

VBR (Variable Bit Rate)

In VBR mode, the user declares the peak and the average (sustainable) bit rate at the beginning. VBR comes in real-time and non-real-time variants.

For example, real-time VBR can be used for services such as video conferencing, while non-real-time VBR suits services such as playing back stored video.
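Declared VBR rates are typically enforced by policing each cell against the declared peak and sustainable rates. The Python sketch below uses the virtual-scheduling form of the Generic Cell Rate Algorithm (GCRA), run once for the peak rate and once for the sustainable rate with a burst tolerance. Time is in abstract integer ticks, cell delay variation tolerance is set to zero, the burst tolerance uses the relation BT = (MBS - 1) * (Ts - Tp), and the class and function names are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Gcra:
    """Generic Cell Rate Algorithm, virtual-scheduling form.

    increment: expected spacing between cells (1 / rate), in ticks.
    limit: how early a cell may arrive and still conform, in ticks.
    """
    increment: int
    limit: int
    tat: Optional[int] = None  # theoretical arrival time of the next cell

    def conforms(self, t: int) -> bool:
        # A cell is non-conforming if it arrives more than `limit` ticks early.
        return self.tat is None or t >= self.tat - self.limit

    def update(self, t: int) -> None:
        # Schedule the next theoretical arrival one increment after this cell.
        start = t if self.tat is None else max(t, self.tat)
        self.tat = start + self.increment


def burst_tolerance(mbs: int, ts: int, tp: int) -> int:
    """Tolerance letting `mbs` back-to-back cells through at peak spacing tp."""
    return (mbs - 1) * (ts - tp)


def police_vbr(arrivals: list[int], peak: Gcra, sustainable: Gcra) -> list[bool]:
    """Flag each arrival as conforming (True) or non-conforming (False).

    A cell must pass both checks; only conforming cells advance the schedules.
    """
    results = []
    for t in arrivals:
        ok = peak.conforms(t) and sustainable.conforms(t)
        if ok:
            peak.update(t)
            sustainable.update(t)
        results.append(ok)
    return results


# Peak spacing 1 tick, sustainable spacing 4 ticks, maximum burst of 3 cells.
peak = Gcra(increment=1, limit=0)
sustainable = Gcra(increment=4, limit=burst_tolerance(mbs=3, ts=4, tp=1))
print(police_vbr([0, 1, 2, 3, 4, 12], peak, sustainable))
# [True, True, True, False, False, True]
```

A back-to-back burst of 5 cells has its last 2 cells flagged because it exceeds the declared burst size, while a cell arriving later at tick 12 conforms again. What happens to flagged cells (discarding them or tagging them as low priority) is a network policy choice the sketch does not model.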

AAL Types & Services offered in detail:

Here is a table of the services offered under different AAL types:

Segmentation and Reassembly Sublayer (SAR)

As the name suggests, the SAR sublayer is responsible for segmenting higher-layer data into 48-byte cell payloads. At the receiving end, the same sublayer reassembles the data.

When ATM was created, it was envisioned as a scalable technology that would support very high speeds. To achieve this, the cell payload was kept small and fixed at 48 bytes, so that segmentation and reassembly could be done faster. ATM switches also employ ASICs (Application-Specific Integrated Circuits) to process cells in hardware.
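Here is a simplified Python sketch of segmentation and reassembly, loosely modeled on AAL5: the message is padded so that it, plus an 8-byte trailer (user-to-user byte, CPI byte, 2-byte length, 4-byte CRC), fills a whole number of 48-byte payloads, and the final cell is flagged as the end of the message. As a stated simplification, Python's `zlib.crc32` is a stand-in for, not a bit-exact copy of, the AAL5 CRC-32, so the output is not wire-compatible; the function names are hypothetical.

```python
import struct
import zlib

CELL_PAYLOAD = 48  # bytes of payload per cell
TRAILER_LEN = 8    # UU (1) + CPI (1) + length (2) + CRC (4)


def segment(pdu: bytes) -> list[tuple[bytes, bool]]:
    """Split a higher-layer PDU into 48-byte payloads, AAL5-style.

    Returns (payload, is_last) pairs; is_last stands in for the end-of-message
    marker that AAL5 signals in the cell header.
    """
    if len(pdu) > 0xFFFF:
        raise ValueError("the 2-byte length field limits a PDU to 65535 bytes")
    pad = bytes((-(len(pdu) + TRAILER_LEN)) % CELL_PAYLOAD)  # fill the last cell
    head = pdu + pad + struct.pack("!BBH", 0, 0, len(pdu))   # UU, CPI, length
    # Simplification: zlib.crc32 is a stand-in, not the bit-exact AAL5 CRC-32.
    frame = head + struct.pack("!I", zlib.crc32(head))
    chunks = [frame[i:i + CELL_PAYLOAD] for i in range(0, len(frame), CELL_PAYLOAD)]
    return [(chunk, i == len(chunks) - 1) for i, chunk in enumerate(chunks)]


def reassemble(cells: list[tuple[bytes, bool]]) -> bytes:
    """Rebuild the PDU, rejecting it if the trailer checks fail."""
    if not cells or not cells[-1][1]:
        raise ValueError("end-of-message cell missing")
    frame = b"".join(payload for payload, _ in cells)
    _uu, _cpi, length, crc = struct.unpack("!BBHI", frame[-TRAILER_LEN:])
    if zlib.crc32(frame[:-4]) != crc:
        raise ValueError("CRC mismatch: a cell was lost or corrupted")
    if length > len(frame) - TRAILER_LEN:
        raise ValueError("length field does not fit the frame")
    return frame[:length]


message = b"x" * 100
cells = segment(message)
print(len(cells), [last for _, last in cells])   # 3 [False, False, True]
print(reassemble(cells) == message)              # True
```

Dropping any one of the three cells produced for the 100-byte message makes reassembly fail, which fits the earlier point that ATM preserves cell order but does not guarantee delivery.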

ATM Layer

- The ATM layer is akin to the network layer of the OSI model, although it also has some data link layer properties.

- To set up a connection, the ATM layer uses globally unique ATM addresses. ATM does not use IP addressing.

- Once a connection has been established, cells no longer carry full addresses; their headers carry only virtual path and virtual channel identifiers (VPI and VCI).
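The path and circuit identifiers travel in the 5-byte cell header. The Python sketch below packs and parses the header format used at the user-network interface (UNI): a 4-bit GFC, 8-bit VPI, 16-bit VCI, 3-bit payload type (PT), 1-bit cell loss priority (CLP), and an 8-bit header error control (HEC) byte computed as a CRC-8 (generator x^8 + x^2 + x + 1) over the first four bytes, XORed with 0x55. It only detects HEC errors, whereas real hardware can also correct single-bit errors, and it does not cover the network-node interface (NNI) format, which uses a 12-bit VPI in place of the GFC. The function names are hypothetical.

```python
import struct


def crc8_atm(data: bytes) -> int:
    """CRC-8 with generator x^8 + x^2 + x + 1 (0x07), MSB first, initial value 0."""
    crc = 0
    for byte in data:
        crc ^= byte
        for _ in range(8):
            crc = ((crc << 1) ^ 0x07) & 0xFF if crc & 0x80 else (crc << 1) & 0xFF
    return crc


def pack_uni_header(gfc: int, vpi: int, vci: int, pt: int, clp: int) -> bytes:
    """Build a 5-byte UNI cell header: GFC(4) VPI(8) VCI(16) PT(3) CLP(1) HEC(8)."""
    for name, value, bits in (("gfc", gfc, 4), ("vpi", vpi, 8), ("vci", vci, 16),
                              ("pt", pt, 3), ("clp", clp, 1)):
        if not 0 <= value < (1 << bits):
            raise ValueError(f"{name}={value} does not fit in {bits} bits")
    word = (gfc << 28) | (vpi << 20) | (vci << 4) | (pt << 1) | clp
    first4 = struct.pack("!I", word)
    hec = crc8_atm(first4) ^ 0x55   # the CRC remainder is XORed with 01010101
    return first4 + bytes([hec])


def unpack_uni_header(header: bytes) -> dict[str, int]:
    """Parse a UNI header, rejecting it if the HEC does not match."""
    if len(header) != 5:
        raise ValueError("an ATM cell header is exactly 5 bytes")
    if (crc8_atm(header[:4]) ^ 0x55) != header[4]:
        raise ValueError("HEC mismatch")
    (word,) = struct.unpack("!I", header[:4])
    return {
        "gfc": word >> 28,
        "vpi": (word >> 20) & 0xFF,
        "vci": (word >> 4) & 0xFFFF,
        "pt": (word >> 1) & 0x7,
        "clp": word & 0x1,
    }


idle = pack_uni_header(gfc=0, vpi=0, vci=0, pt=0, clp=1)
print(idle.hex(" "))                                   # 00 00 00 01 52
print(unpack_uni_header(pack_uni_header(0, 12, 345, 0, 0)))
```

The header 00 00 00 01 (the pattern used for idle cells) yields an HEC of 0x52, which makes a handy check on the CRC routine. Because each switch only needs to read these few header bits, looking up the VPI/VCI in a table is simple enough to do in hardware at line rate.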

Why did ATM technology not survive?

By now you have read plenty about ATM technology, and the question “Why did ATM not survive if it was so efficient and advanced?” has probably crossed your mind.

Even though ATM addressed a lot of issues and offered à la carte solutions to users’ needs, several other factors kept it from competing for long. Here are some of the reasons:

The speed of the device operation:

Most ATM devices operated at speeds that did not match the 10, 100, and 1000 Mbps rates common in everyday Ethernet networks. For example, OC-3 runs at around 155 Mbps and OC-12 at around 622 Mbps.

Costly & complex Hardware

Because both the telephony and the internet industries were involved, standardizing this technology took too long. Over time, it also grew too complex, which resulted in sophisticated hardware that was far more costly than ordinary computer networking devices. For example, ATM switches were more expensive than Ethernet switches, which work at layer 2.

Non-IP based addressing system

Making the situation worse, most of the networking software and hardware on the market was built for IP-based networks rather than ATM-based networks. So, not much software was available for ATM, and no one wanted to move to ATM if it meant investing a lot of money in new hardware.

Delay

Last but not least, standardizing the protocols involved far too much delay. Also, many leading computer networking companies tried to push their own proprietary solutions to influence the market.

Bringing together all the companies and working groups (including research and study groups) from the telephony as well as the internet world delayed the whole standardization process.

Gradually, because of the major issues mentioned above and other minor ones, ATM was phased out of the market.
