Intel & Facebook Are Collaborating To Design AI Deep Learning Processor

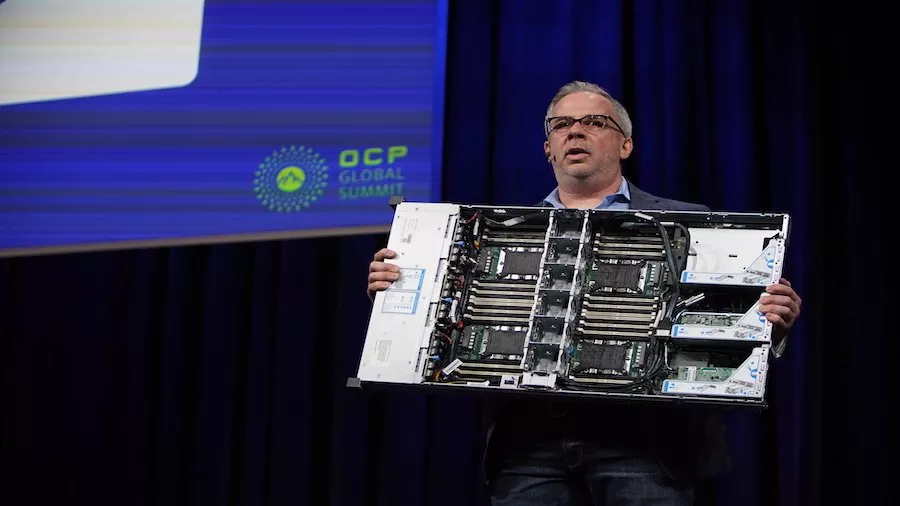

Intel announced at the Open Compute Project Global Summit 2019 that it is collaborating with Facebook to design its 14nm Cooper Lake Xeon processor family. Jason Waxman, Intel’s VP of the Data Center Group and GM of the Cloud Platforms Group, revealed the partnership during a keynote in which he also presented new open hardware advancements.

Waxman said that Facebook is helping Intel build support for the bfloat16 format into Cooper Lake’s design. bfloat16 is a 16-bit floating-point format that is well suited to deep learning training. It keeps the same 8-bit exponent, and therefore the same dynamic range, as 32-bit floating point (FP32) while using half the bits, which reduces memory and bandwidth requirements and can speed up computation. The format can accelerate deep learning training for tasks such as image classification, recommendation engines, machine translation, and speech recognition.
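To make the "same dynamic range, half the bits" idea concrete, here is a minimal software sketch of converting an FP32 value to bfloat16. It assumes round-to-nearest-even on the 16 discarded bits, which is a common choice; it is not a description of how Cooper Lake hardware performs the conversion, and the helper names are hypothetical. Inputs must lie within the FP32 range, since `struct` refuses to pack larger values.

```python
import math
import struct


def float32_to_bits(x: float) -> int:
    """Round x to IEEE 754 single precision and return its 32-bit pattern."""
    return struct.unpack("<I", struct.pack("<f", x))[0]


def bits_to_float32(bits: int) -> float:
    """Interpret a 32-bit pattern as an IEEE 754 single-precision float."""
    return struct.unpack("<f", struct.pack("<I", bits))[0]


def to_bfloat16_bits(x: float) -> int:
    """Hypothetical helper: keep the top 16 bits of the FP32 pattern
    (sign, 8-bit exponent, 7 mantissa bits), rounding to nearest even."""
    if math.isnan(x):
        return 0x7FC0  # canonical quiet NaN (assumed convention)
    bits = float32_to_bits(x)
    lsb = (bits >> 16) & 1          # lowest bit that survives truncation
    rounding_bias = 0x7FFF + lsb    # ties go to the even neighbour
    return ((bits + rounding_bias) >> 16) & 0xFFFF


def bfloat16_bits_to_float(b: int) -> float:
    """Widen bfloat16 back to FP32 by appending 16 zero bits."""
    return bits_to_float32(b << 16)


if __name__ == "__main__":
    for value in (1.0, 1.0 + 2**-10, 1e38):
        b = to_bfloat16_bits(value)
        print(f"{value!r} -> 0x{b:04X} -> {bfloat16_bits_to_float(b)!r}")
```

The example shows the trade-off: a large value such as 1e38 stays finite because the exponent field is as wide as FP32's (IEEE half precision, with its 5-bit exponent, tops out at 65504), while a small increment such as 2**-10 is lost because only 7 mantissa bits remain.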

Intel will expand these platforms from the current two-socket design to four- and eight-socket designs. In a four-socket server, the upcoming Xeon Scalable processors are speculated to offer up to 112 cores and 224 threads. The processors will also be able to address up to 12 TB of Intel’s DC Persistent Memory Modules (DCPMM).

Intel’s Cooper Lake processors will compete with AMD’s EPYC lineup, which is built on a 7nm process.

Even with 112 cores and 224 threads across four sockets, Cooper Lake would still trail AMD’s EPYC CPUs, which offer 128 cores and 256 threads on a dual-socket motherboard.
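The gap is easier to see per socket. The short calculation below uses only the figures quoted above (the Cooper Lake numbers are speculative) and assumes cores and threads are split evenly across sockets; the `Platform` class is a hypothetical helper for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Platform:
    """Hypothetical container for the article's core/thread/socket figures."""
    name: str
    cores: int
    threads: int
    sockets: int

    @property
    def cores_per_socket(self) -> int:
        # Assumes cores are divided evenly across sockets.
        return self.cores // self.sockets

    @property
    def threads_per_core(self) -> int:
        return self.threads // self.cores


cooper_lake = Platform("Cooper Lake (4-socket, speculated)", 112, 224, 4)
epyc = Platform("AMD EPYC (2-socket)", 128, 256, 2)

for p in (cooper_lake, epyc):
    print(f"{p.name}: {p.cores_per_socket} cores/socket, "
          f"{p.threads_per_core} threads/core")
```

Both platforms run two threads per core, so the difference comes down to density: 28 cores per socket for the four-socket Cooper Lake figure versus 64 per socket for EPYC.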
