Garena Free Fire Max Redeem Codes for June 27

Garena Free Fire Max is one of the most popular games on the planet, and for…

Vivo has launched the T4 Lite 5G in India, adding strength to the company’s budget T-series…

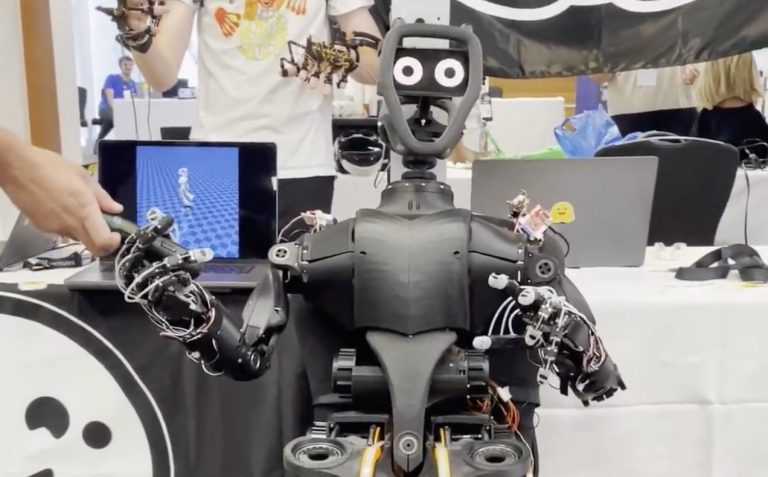

Robotics has become a game-changer in logistics, where speed and accuracy are paramount. Figure AI’s recent innovations…

The hospitality sector is embracing a tech revolution with the introduction of the Zerith H1 by…

Gaming laptops have come a long way, but the Asus ROG Strix G16 is heading straight…

Hugging Face, an artificial intelligence and machine learning company, is entering the robotics space with two…

Looking at how the business environment is set up today, choosing the right Business Process Management…

There are many reasons not to invest in a robot vacuum cleaning system, but surprisingly, for…

While the Tesla Optimus is often regarded as the most advanced humanoid robot, UBTech, a Chinese…

HP has introduced the OmniStudio X All-in-One (AIO) PC in India. It is a stylish 32-inch…